Practical tips on how to approach your interview prep, including understanding the role, useful links, and sample questions

This article offers tips for anyone interested in a career as a computer vision engineer at Mujin. It should help job seekers prepare for interviews in this field, including interviews for our open positions.

This post describes the skills you need and what to expect in the interview, but it doesn’t cover basic computer science or even the basics of computer vision; you can find useful links for review below. Note also that this guide treats computer vision as helping computers understand a real 3D scene, not just image processing.

What job are you interviewing for?

Let’s start with our first and most important tip: make sure you understand the job. Learn about the organization, its team structure and culture, and your potential teammates, and be prepared to prove that you have the skills needed to do the job.

Computer vision requires basic skills, usually acquired by working with these concepts and tools for more than a few months:

- Mathematics, because computer vision models physical processes such as how images are formed

- Programming skills, data structures, and other aspects of computer science

- The ability to learn, because new challenges always require new skills

When honing your computer vision skills, prepare specifically for the tasks the role requires. Techniques vary with the application, so be well-versed in the methods relevant to each task.

Here are some common application areas of computer vision:

Self-driving cars, Automated Guided Vehicles (AGVs), and other wheeled mobile robots

Computer vision is widely used to avoid obstacles and people. When designing a people-detection algorithm for these platforms, you can usually assume that the sensors and cameras are aligned with gravity, which makes it easier to build an efficient detector: people walking upright, whether on the street or in a warehouse, also appear upright in the image, so the algorithm doesn’t need to account for every possible rotation.
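Whether the gravity-aligned assumption actually holds can be checked at runtime. Below is a minimal sketch, assuming an IMU rigidly mounted with the camera whose gravity estimate (pointing down, for example the negated accelerometer reading while the robot is at rest) is already expressed in an OpenCV-style camera frame (x right, y down, z forward). It measures the total tilt (pitch and roll combined) between the camera’s +y axis and gravity; the function names and the 5-degree tolerance are hypothetical.

```python
import math


def tilt_from_gravity_deg(gravity_cam):
    """Angle (degrees) between the camera's +y axis and the gravity direction.

    Assumes an OpenCV-style camera frame (x right, y down, z forward), so a
    level, upright camera sees gravity along +y and the tilt is 0.
    """
    gx, gy, gz = gravity_cam
    norm = math.sqrt(gx * gx + gy * gy + gz * gz)
    if norm == 0.0:
        raise ValueError("gravity vector must be non-zero")
    # cos(angle) = dot((0, 1, 0), g) / |g|; clamp to guard against rounding.
    cos_angle = max(-1.0, min(1.0, gy / norm))
    return math.degrees(math.acos(cos_angle))


def is_gravity_aligned(gravity_cam, tol_deg=5.0):
    # Hypothetical threshold: treat the camera as upright within tol_deg degrees.
    return tilt_from_gravity_deg(gravity_cam) <= tol_deg


level = (0.0, 9.81, 0.0)
# Camera rolled by 30 degrees about its optical (z) axis.
rolled = (9.81 * math.sin(math.radians(30)), 9.81 * math.cos(math.radians(30)), 0.0)
print(is_gravity_aligned(level), round(tilt_from_gravity_deg(rolled), 6))  # True 30.0
```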

Drones, satellites, and other platforms that capture images from high altitude

Seen from high above, the scene is nearly flat, so objects can be treated as two-dimensional shapes in the image, which simplifies localization and navigation. Objects also tend to cover relatively few pixels, so recognition has to be fast and work at low resolution, and the captured images must be optimized for that. Based on these assumptions, you can narrow down the types of algorithms you’ll use.
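A standard back-of-the-envelope quantity for this kind of imagery is the ground sample distance (GSD): how many meters of ground a single pixel covers. Under a pinhole model with the camera pointing straight down over flat ground, GSD = altitude × pixel pitch / focal length, which tells you how many pixels a target will span and therefore what resolution recognition actually has to work with. The sketch below assumes that nadir, flat-ground setup; the function names and example numbers are hypothetical.

```python
def ground_sample_distance_m(altitude_m, focal_length_mm, pixel_pitch_um):
    """Meters of ground covered by one pixel for a nadir-pointing pinhole camera
    over flat ground: GSD = altitude * pixel_pitch / focal_length."""
    pixel_pitch_mm = pixel_pitch_um / 1000.0  # put pitch and focal length in the same unit
    return altitude_m * pixel_pitch_mm / focal_length_mm


def pixels_on_target(object_size_m, altitude_m, focal_length_mm, pixel_pitch_um):
    # Approximate number of pixels an object of the given size spans in the image.
    return object_size_m / ground_sample_distance_m(altitude_m, focal_length_mm, pixel_pitch_um)


# Hypothetical drone: 100 m altitude, 8 mm lens, 2.4 um pixels.
gsd = ground_sample_distance_m(100.0, 8.0, 2.4)
print(f"{gsd:.3f} m/pixel")                            # 0.030 m/pixel
print(round(pixels_on_target(4.5, 100.0, 8.0, 2.4)))   # 150 (a 4.5 m car)
```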

Augmented Reality (AR)

AR blends virtual content with the real world, using sensors and cameras to augment what the user sees. For instance, in a game played on a table, cameras are needed to perceive the environment so that virtual animations can be integrated into the scene. AR jobs typically involve complex computer vision work, and object detection, SLAM, and target pose estimation are the most common tasks in developing AR applications. This is because reconstructing a scene involves mapping (as in SLAM), while representing objects requires scanning their geometry and appearance.

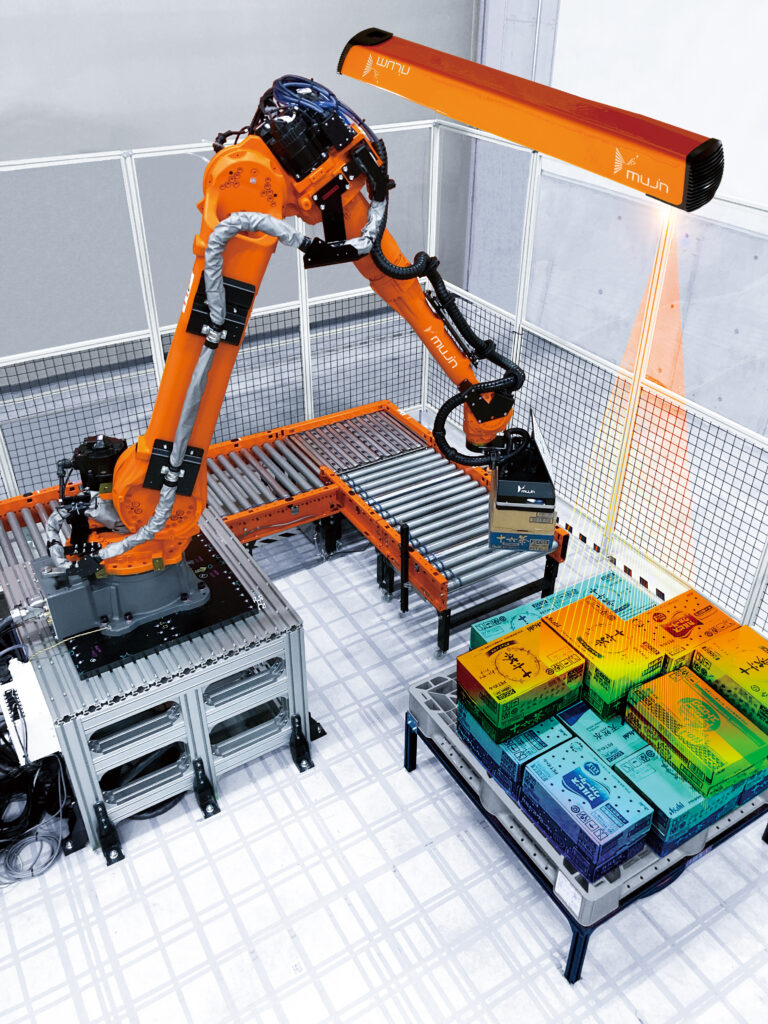
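A small piece of the AR pipeline shows why pose estimation matters: a SLAM tracker typically reports the camera’s pose in a world frame, while virtual content is anchored at a fixed world position, so each frame the anchor has to be re-expressed in the camera frame by inverting the camera pose. The sketch below is plain Python with 3x3 nested-list rotations; the pose convention (R_wc, t_wc map camera coordinates to world coordinates) and the function names are assumptions for illustration.

```python
def transpose(M):
    return [[M[j][i] for j in range(3)] for i in range(3)]


def mat_vec(M, v):
    return [sum(M[i][k] * v[k] for k in range(3)) for i in range(3)]


def world_to_camera(p_world, R_wc, t_wc):
    """Express a world-frame point in the camera frame.

    Assumed convention: the tracker reports the camera pose as (R_wc, t_wc) with
    p_world = R_wc @ p_cam + t_wc, so p_cam = R_wc^T @ (p_world - t_wc).
    """
    diff = [p_world[i] - t_wc[i] for i in range(3)]
    return mat_vec(transpose(R_wc), diff)  # R^T is the inverse of a rotation


# Camera 1 m along world +x, turned so its optical (z) axis points along world -x,
# i.e. back toward a virtual object anchored at the world origin.
R_wc = [[0.0, 0.0, -1.0],
        [0.0, 1.0, 0.0],
        [1.0, 0.0, 0.0]]
t_wc = [1.0, 0.0, 0.0]
print(world_to_camera([0.0, 0.0, 0.0], R_wc, t_wc))  # [0.0, 0.0, 1.0]
```

The anchor ends up 1 m straight in front of the camera, so projecting it would place the virtual object at the image center.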

Picking robots and most other robots that manipulate objects

From the sensors’ point of view, the objects to be manipulated can lie in any orientation, unrelated to gravity. Inter-reflection is another challenge: shiny objects pick up colors, highlights, and reflections from nearby objects. In a cluttered environment, you also need to deal with occlusions. All of these make segmentation and pose detection harder.

As these examples show, how your computer vision experience is valued depends on the nature of the system and the tasks it has to solve. So our first suggestion is to tailor your interview strategy to the job you are pursuing. For example, if you will be building a robotic system that picks up different objects, study the ins and outs of pose estimation, including rotations, low-light conditions, and other practical considerations. Ultimately, you’ll have a better chance of success if you understand the job as thoroughly as possible and are prepared to talk about your relevant skills and experience.
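One quantity that comes up constantly when studying rotations in pose estimation is the angular error between an estimated rotation and the ground truth. Because objects can be in any orientation, comparing Euler angles component by component is misleading; a common metric is the angle of the relative rotation, theta = arccos((trace(R_est^T R_gt) - 1) / 2). The sketch below is plain Python with rotation matrices as nested lists; the helper names are hypothetical.

```python
import math


def rot_z(angle_rad):
    # Rotation about the z axis, used here only to build test inputs.
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rotation_error_deg(R_est, R_gt):
    """Angle (degrees) of the relative rotation R_est^T @ R_gt."""
    # trace(A^T B) equals the sum of element-wise products of A and B.
    trace = sum(R_est[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    # Clamp so rounding noise cannot push the argument outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_theta))


print(round(rotation_error_deg(rot_z(math.radians(10)), rot_z(math.radians(25))), 6))  # 15.0
print(round(rotation_error_deg(rot_z(math.radians(350)), rot_z(math.radians(10))), 6))  # 20.0
```

The second line shows the wrap-around case: 350 and 10 degrees are only 20 degrees apart, which a naive difference of angles would report as 340.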

How to prepare for the technical interview at Mujin

The skills-based interview is one of the first steps in our selection process. Most interviewers want to gauge how well you can apply fundamental skills. Like others in the industry, our skill check includes a programming test and questions about algorithms and data structures. We also test your understanding of computer vision basics. You’ll need to show that you know what to achieve, how to achieve it, and that you have the programming skills to implement it successfully. As an engineer, what matters is making things work and finding a solution. Typical problems we solve here include:

- 3D pose estimation

- Object detection & classification

- SLAM

- Calibration

- Writing drivers to acquire sensor data, including data capture, data streaming, and reconstruction

- Object and scene reconstruction

- Evaluating sensor capabilities in real environments

Programming — what to expect

Computer vision roles generally involve C++ and Python, so be prepared to discuss your experience with both in the interview. The combination is popular because the two languages can call each other in production: code can be integrated quickly in an R&D environment and later optimized for performance. Be ready to show your portfolio or samples of code you’ve written.

Computer vision-related interview questions

Computer vision is a complex subject within computer science because it brings together interrelated topics from math, physics, and electronics. A few well-chosen questions can go deep and reveal how well a candidate knows these subjects.

A few key questions that you may encounter:

Q: How do you project a 3D point to an image?

There is a trick to this question: you’ll need to ask the follow-up question “In what coordinate frame is the point represented?” You want to know whether the point is already in the camera frame or in some other frame, such as a world or robot frame. To transform the point from another frame into the camera frame, you need to know the rigid transformation (rotation and translation) between the two. To project the point onto the image, you then need the camera intrinsics, such as the camera matrix and lens distortion.
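The full answer fits in a few lines. The sketch below assumes the point is given in a world (or robot) frame with a known rigid transformation (R, t) into the camera frame, a pinhole camera matrix with focal lengths fx, fy and principal point cx, cy, and only the first two radial distortion terms (k1, k2) of the model OpenCV uses; real calibrations often add more terms such as tangential distortion. The function name and numbers are hypothetical.

```python
def project_point(p_world, R, t, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point to pixel coordinates (u, v).

    Assumptions: p_cam = R @ p_world + t maps the point into the camera frame,
    the camera looks along +z, and lens distortion is purely radial (k1, k2).
    Returns None for points at or behind the camera plane.
    """
    # 1. Rigid transformation (extrinsics) into the camera frame.
    x, y, z = [sum(R[i][k] * p_world[k] for k in range(3)) + t[i] for i in range(3)]
    if z <= 0.0:
        return None
    # 2. Perspective division onto the normalized image plane (z = 1).
    xn, yn = x / z, y / z
    # 3. Radial lens distortion, as a polynomial in r^2.
    r2 = xn * xn + yn * yn
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    xd, yd = xn * scale, yn * scale
    # 4. Camera matrix (intrinsics): normalized coordinates to pixels.
    return fx * xd + cx, fy * yd + cy


I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Camera at the world origin; point 0.2 m right, 0.1 m down, 2 m ahead.
print(project_point([0.2, 0.1, 2.0], I3, [0.0, 0.0, 0.0], 600.0, 600.0, 320.0, 240.0))  # (380.0, 270.0)
```

Keeping the steps separate mirrors the interview answer: first settle the coordinate frame, then apply the intrinsics.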

Q: How do you make 3D measurements using 2D cameras/sensors?

Here you need to demonstrate an understanding of epipolar geometry and the essential matrix, as well as the relationship between 2D image points and 3D points. You’ll need to know the basics that let you make measurements in a scene; once you understand how the camera maps the scene into the image, you can start using the sensor as a measurement device.
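A compact way to show you understand the essential matrix is to build it from a known relative pose and verify the epipolar constraint. Under the convention X2 = R X1 + t (camera-2 coordinates from camera-1 coordinates), E = [t]x R, where [t]x is the cross-product matrix of t, and any pair of corresponding normalized image points satisfies x2^T E x1 = 0. The sketch below assumes that convention, noise-free points, and hypothetical helper names; with real measurements the residual is only approximately zero.

```python
def skew(t):
    """Cross-product matrix [t]x such that skew(t) @ v == cross(t, v)."""
    return [[0.0, -t[2], t[1]],
            [t[2], 0.0, -t[0]],
            [-t[1], t[0], 0.0]]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(M, v):
    return [sum(M[i][k] * v[k] for k in range(3)) for i in range(3)]


def essential_matrix(R, t):
    # Assumed convention: X2 = R @ X1 + t, so E = [t]x @ R.
    return mat_mul(skew(t), R)


def epipolar_residual(E, x1, x2):
    """x2^T E x1 for normalized homogeneous image points (x, y, 1)."""
    Ex1 = mat_vec(E, x1)
    return sum(x2[i] * Ex1[i] for i in range(3))


# Hypothetical stereo pair: same orientation, second camera 0.1 m to the right,
# so points expressed in camera 2 are shifted by t = (-0.1, 0, 0).
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [-0.1, 0.0, 0.0]
X1 = [0.3, -0.2, 2.0]  # 3D point in the camera-1 frame
X2 = [sum(R[i][k] * X1[k] for k in range(3)) + t[i] for i in range(3)]
x1 = [X1[0] / X1[2], X1[1] / X1[2], 1.0]
x2 = [X2[0] / X2[2], X2[1] / X2[2], 1.0]
print(abs(epipolar_residual(essential_matrix(R, t), x1, x2)) < 1e-12)  # True
```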

You can prepare for these types of questions by refreshing your knowledge of camera representation and calibration, epipolar geometry, PnP-based pose estimation, and homographies and transformations.
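Homographies are easy to practice by hand. A planar homography H maps points on one plane to another up to scale, and four correspondences with no three points collinear determine it. The sketch below fixes h33 = 1 and solves the resulting 8x8 linear system with Gaussian elimination in plain Python. That normalization breaks down if the true h33 is zero, and production code usually prefers an SVD-based DLT with point normalization and robust fitting (OpenCV’s findHomography, for example). Function names and points are hypothetical.

```python
def solve_linear(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate configuration (e.g. three collinear points)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def homography_from_4_points(src, dst):
    """H with h33 = 1 such that dst ~ H @ (x, y, 1) for each of four correspondences."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v.
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H, x, y):
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Unit square mapped to a hypothetical quadrilateral in the image.
src = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
dst = [(100.0, 100.0), (300.0, 120.0), (280.0, 310.0), (90.0, 290.0)]
H = homography_from_4_points(src, dst)
print([apply_homography(H, x, y) for x, y in src])  # reproduces dst up to rounding
```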

Q: What is the object, and what are its position and orientation relative to a reference coordinate frame?

Advanced computer vision roles go well beyond detecting bounding boxes. Because 3D pose estimation is about the 3D translation and rotation of objects, it’s important to demonstrate that you can construct a rotation matrix. An object’s origin can be defined by its geometry and appearance, and for any advanced role you will be expected to understand the techniques built on these as well. Here’s a link on feature extraction, feature matching, and homography-based pose estimation.
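One way to show you can construct a rotation matrix is Rodrigues’ formula, which builds R from a unit axis k and an angle theta: R = I + sin(theta) K + (1 - cos(theta)) K^2, where K is the cross-product matrix of k. The sketch below (plain Python, hypothetical function names) also checks the two properties every rotation matrix must have: orthonormal columns (R^T R = I) and determinant +1.

```python
import math


def axis_angle_to_matrix(axis, angle_rad):
    """Rodrigues' formula: R = I + sin(a) K + (1 - cos(a)) K^2 for the unit axis k."""
    n = math.sqrt(sum(c * c for c in axis))
    kx, ky, kz = (c / n for c in axis)  # normalize the axis
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    s, one_minus_c = math.sin(angle_rad), 1.0 - math.cos(angle_rad)
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + one_minus_c * K2[i][j]
             for j in range(3)] for i in range(3)]


def is_rotation(R, tol=1e-9):
    # Orthonormal columns: R^T R must equal the identity.
    for i in range(3):
        for j in range(3):
            dot = sum(R[m][i] * R[m][j] for m in range(3))
            if abs(dot - (1.0 if i == j else 0.0)) > tol:
                return False
    # Determinant +1 rules out reflections.
    det = (R[0][0] * (R[1][1] * R[2][2] - R[1][2] * R[2][1])
           - R[0][1] * (R[1][0] * R[2][2] - R[1][2] * R[2][0])
           + R[0][2] * (R[1][0] * R[2][1] - R[1][1] * R[2][0]))
    return abs(det - 1.0) <= tol


R = axis_angle_to_matrix((1.0, 2.0, 3.0), 0.7)  # arbitrary axis, 0.7 rad
print(is_rotation(R))  # True
```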

Recommended resources for math review

In any computer vision interview, you will likely need to demonstrate applied knowledge of math areas such as:

- Linear Algebra

- Calculus

- Numerical optimization

- Probability

- Geometry

For mathematical optimization, Professors Stephen Boyd and Lieven Vandenberghe wrote a very useful book, Convex Optimization, which is freely available on the web.
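Many calibration and pose-refinement problems reduce to least squares, one of the simplest convex optimization problems. As a minimal warm-up, the sketch below fits a line y = a*x + b to points by solving the 2x2 normal equations in closed form. The function name and data are hypothetical, and real calibration problems are usually nonlinear and solved iteratively, for example with Gauss-Newton or Levenberg-Marquardt.

```python
def fit_line_least_squares(xs, ys):
    """Minimize sum((a*x + b - y)^2) over (a, b) via the normal equations."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("all x values are identical; slope is undefined")
    a = (n * sxy - sx * sy) / denom
    b = (sy - a * sx) / n
    return a, b


# Hypothetical noisy points scattered around y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]
a, b = fit_line_least_squares(xs, ys)
print(round(a, 3), round(b, 3))  # 1.99 1.04
```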

Recommended resources for computer vision review

There are many computer vision materials online. One that stands out is the “Computer Vision” course taught by Srinivasa Narasimhan at Carnegie Mellon University. It provides a comprehensive introduction to image processing, the physics of image formation, the geometry of computer vision, and methods for detection and classification.

Other useful resources:

3D Computer Vision from Guido Gerig at the University of Utah

Computer Vision courses at the University of Florida

Lecture series on 3D sensors from Radu Horaud at INRIA

Our final advice: if you know the topics above but not well, take the time to learn them properly. Computer vision is an exciting field, and many technologies will rely heavily on it. Thanks for reading, and we wish you the best on your journey to building an exciting automated future.

If you are interested in being a part of the Computer Vision team, please apply directly through our career website.